Azure Functions, Neo4j and Dependency Injection

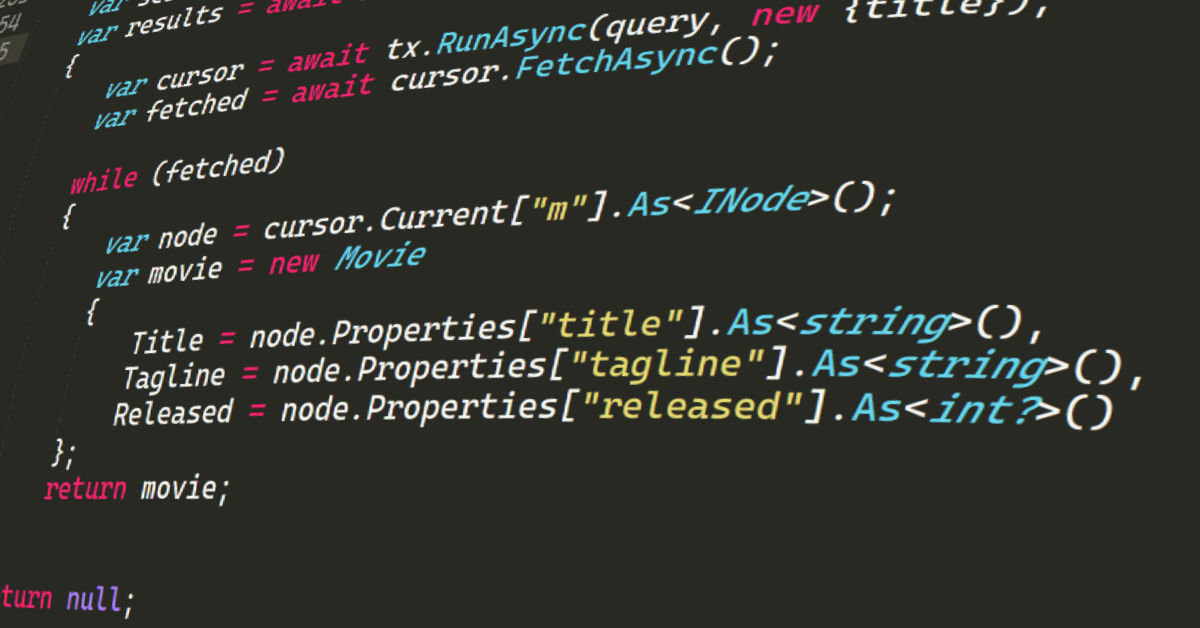

TL;DR – It’s on GitHub: https://github.com/cskardon/Neo4jDriverWithAzureFunctionsDI

I know what you’re thinking right now: that is an exciting headline, and you’re right! Back in May… May 2018 (!!!) I wrote a blog post about using the Neo4j Driver with Azure Functions – and well, things have moved on a bit since then. Largely the code…